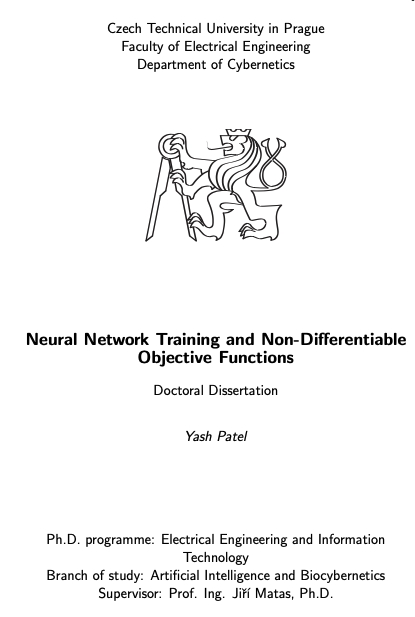

Doctoral Dissertation

Neural Network Training and Non-Differentiable Objective Functions

Yash Patel

Supervisor: Professor Jiří Matas

Ph.D. Dissertation, Czech Technical University in Prague, 2023

Selected Publications

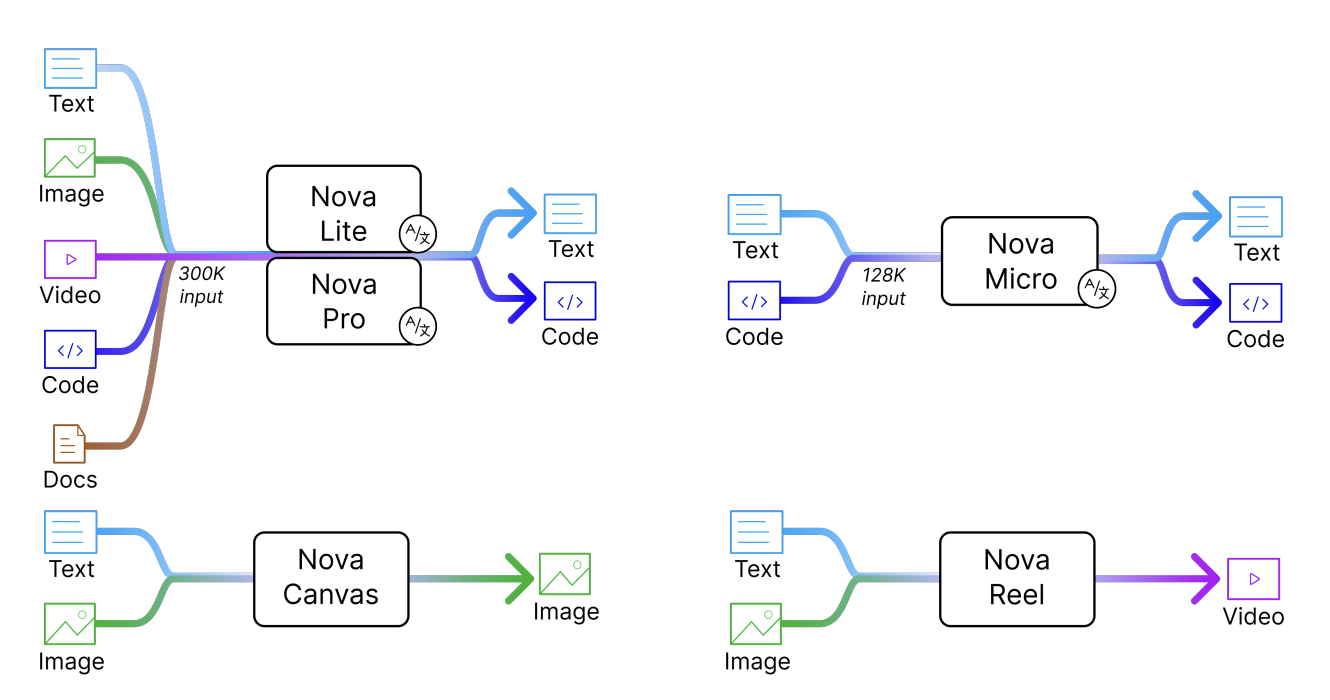

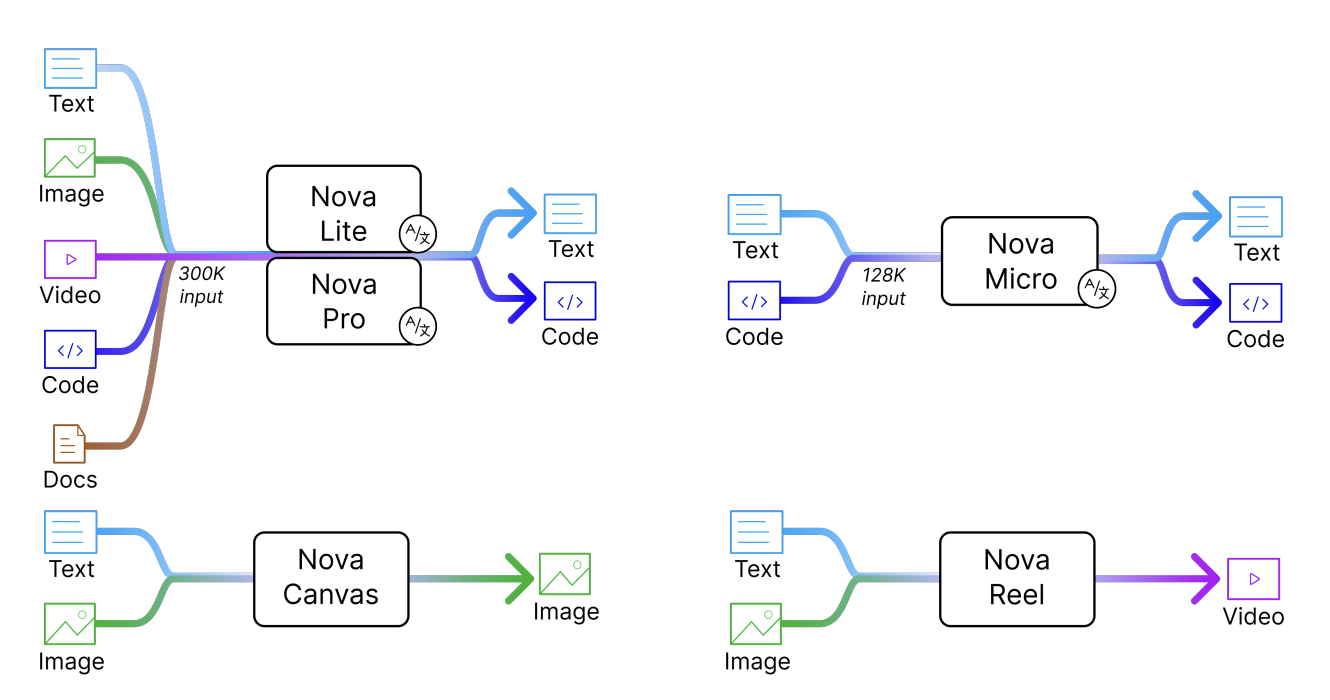

The Amazon Nova family of models: Technical report and model card

Amazon Artificial General Intelligence

Technical Reports, Amazon Science, 2024

pdf abstract bibtex models

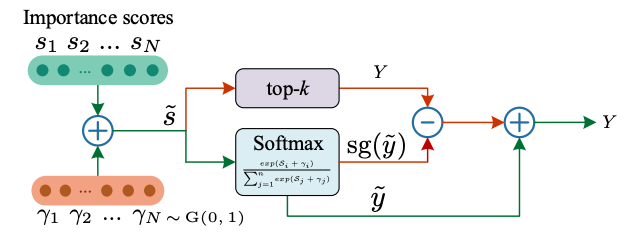

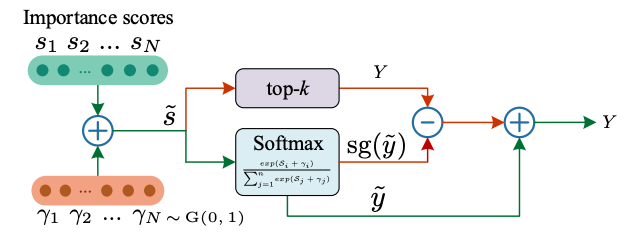

Generalized Differentiable RANSAC

Tong Wei, Yash Patel, Alexander Shekhovtsov, Jiri Matas, Daniel Barath

IEEE/CVF International Conference on Computer Vision (ICCV), 2023

pdf abstract bibtex code

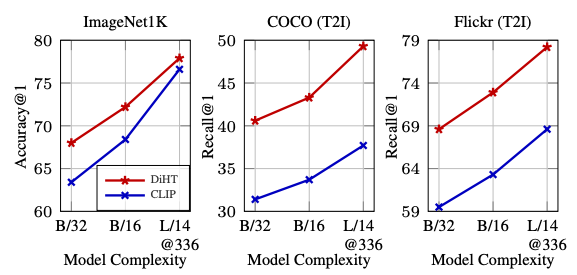

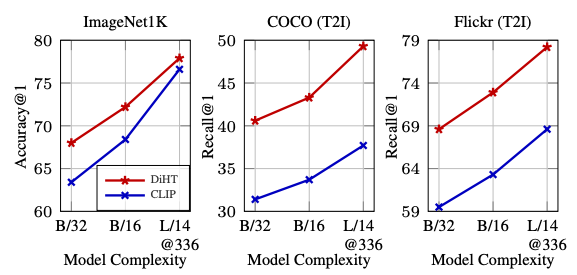

Filtering, Distillation, and Hard Negatives for Vision-Language Pre-Training

Filip Radenovic, Abhimanyu Dubey, Abhishek Kadian, Todor Mihaylov, Simon Vandenhende, Yash Patel, Yi Wen, Vignesh Ramanathan, Dhruv Mahajan

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

pdf abstract bibtex code

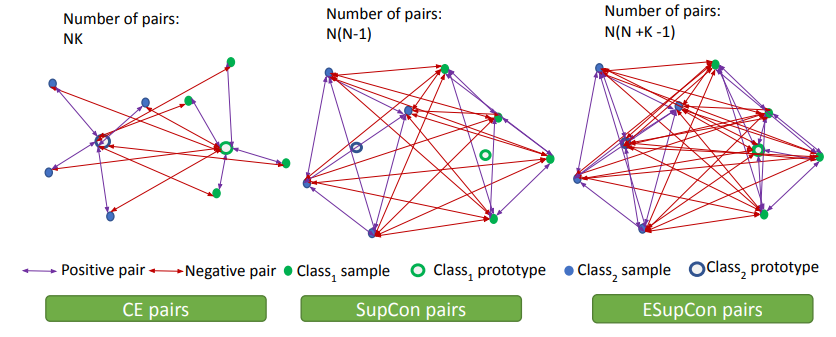

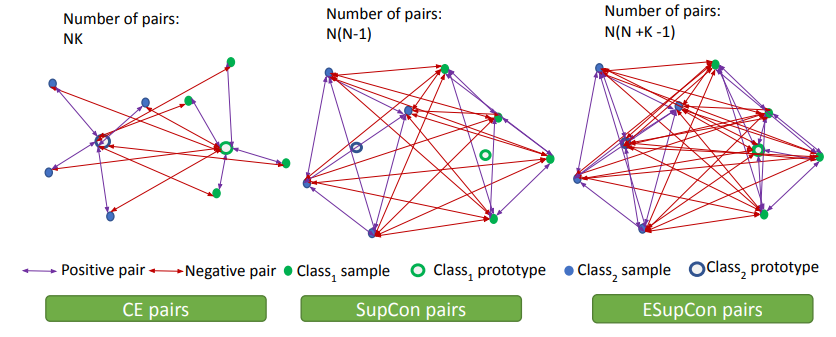

Contrastive Classification and Representation Learning with Probabilistic Interpretation

Rahaf Aljundi, Yash Patel, Milan Sulc, Daniel Olmeda, Nikolay Chumerin

Association for the Advancement of Artificial Intelligence (AAAI), 2023

pdf abstract bibtex

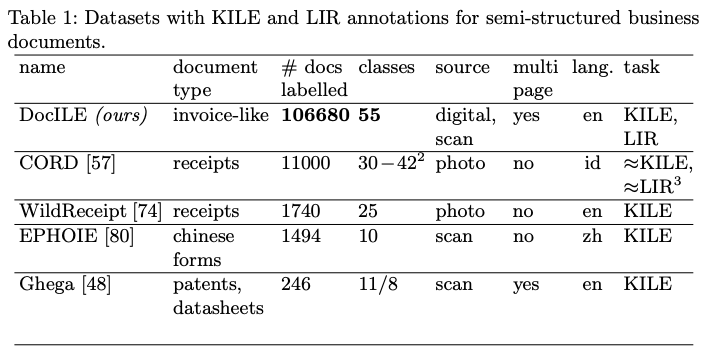

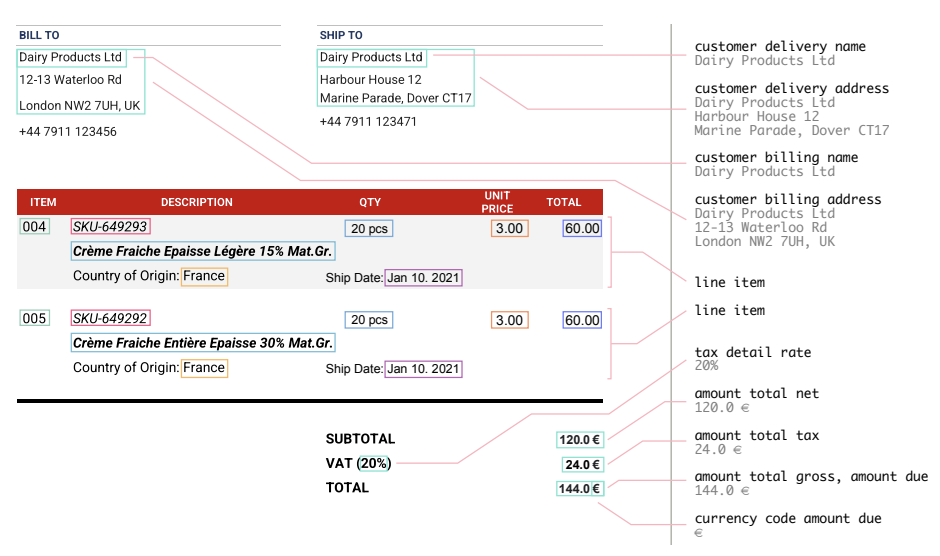

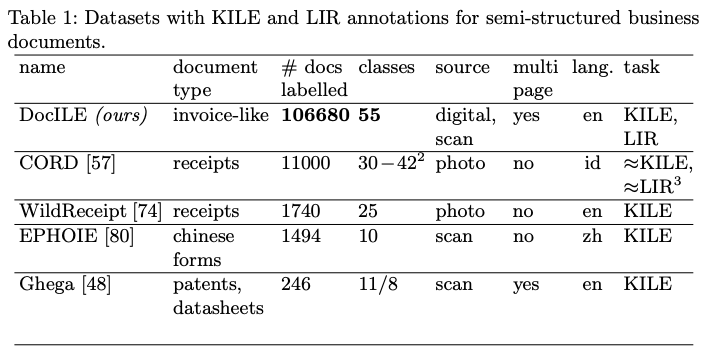

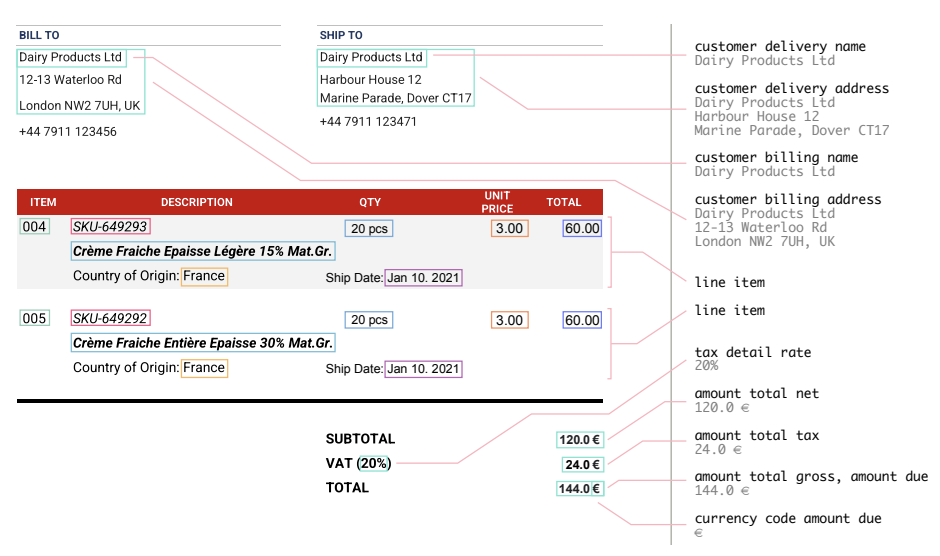

DocILE Benchmark for Document Information Localization and Extraction

Štěpán Šimsa, Milan Šulc, Michal Uřičář, Yash Patel, Ahmed Hamdi, Matěj Kocián, Matyáš Skalický, Jiří Matas, Antoine Doucet, Mickaël Coustaty, Dimosthenis Karatzas

International Conference on Document Analysis and Recognition (ICDAR), 2023 (Oral)

DocILE 2023 Teaser: Document Information Localization and Extraction

Štěpán Šimsa, Milan Šulc, Matyáš Skalický, Yash Patel, Ahmed Hamdi

European Conference on Information Retrieval (ECIR), 2023

pdf abstract bibtex webpage

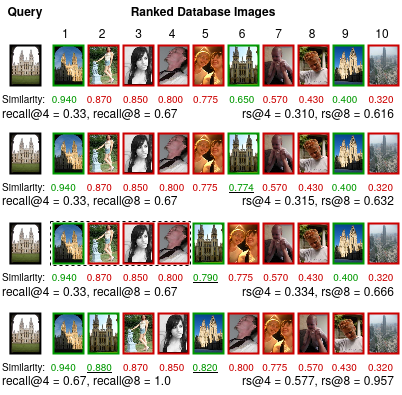

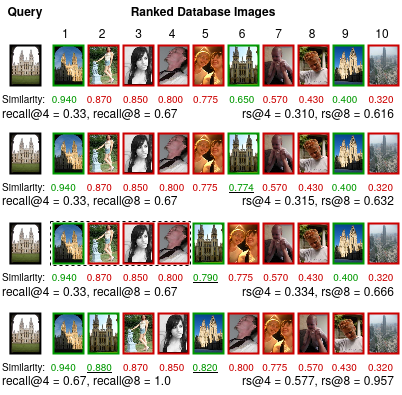

Recall@k Surrogate Loss with Large Batches and Similarity Mixup

Yash Patel, Giorgos Tolias, Jiri Matas

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022

pdf supplementary abstract bibtex webpage code video

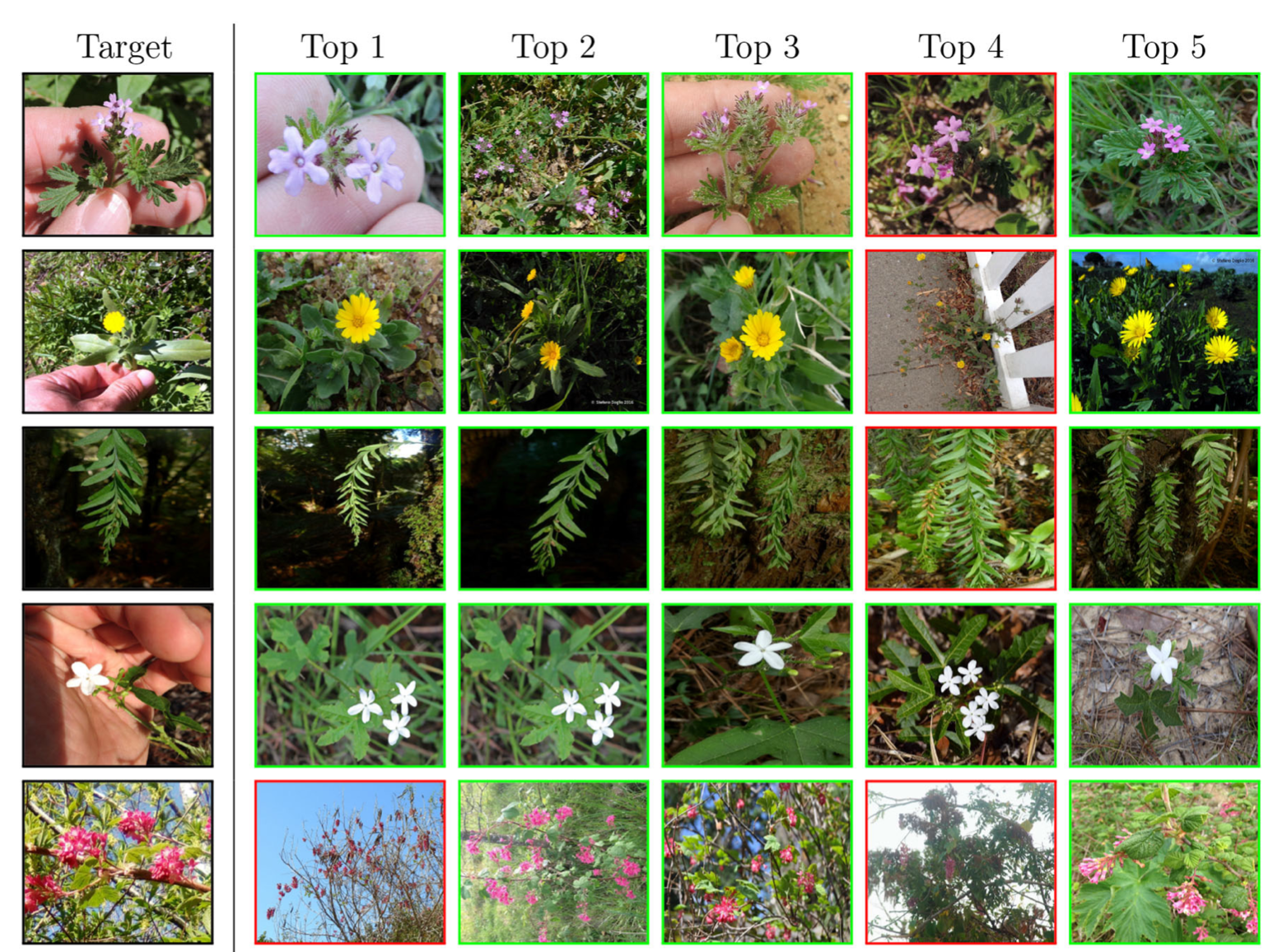

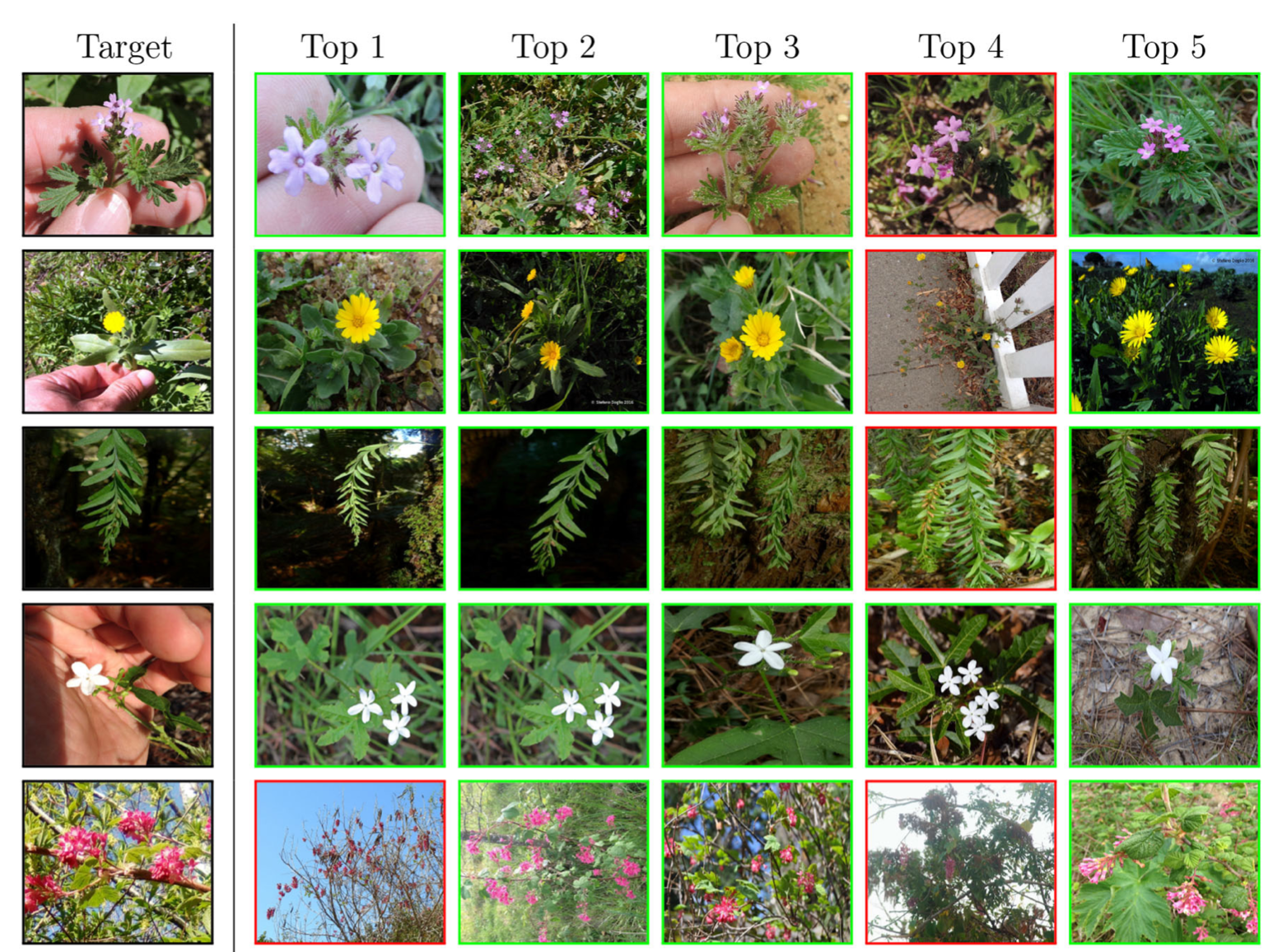

Plant recognition by AI: Deep neural nets, transformers, and kNN in deep embeddings

Lukáš Picek, Milan Šulc, Yash Patel, Jiri Matas

Frontiers in Plant Science, 2022

pdf abstract bibtex

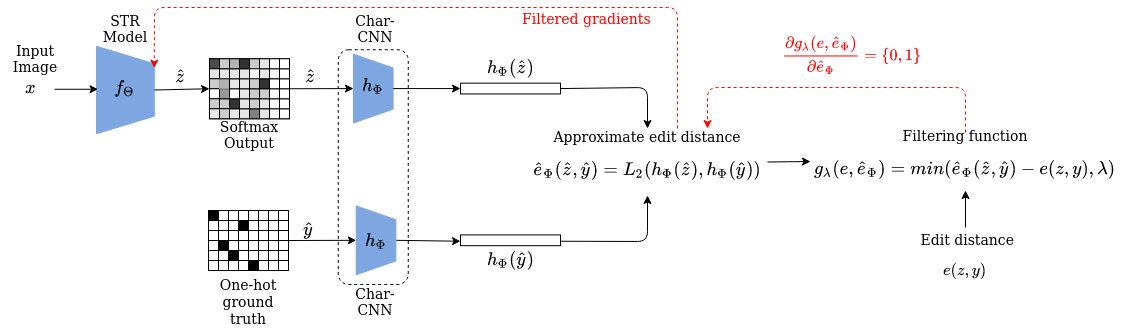

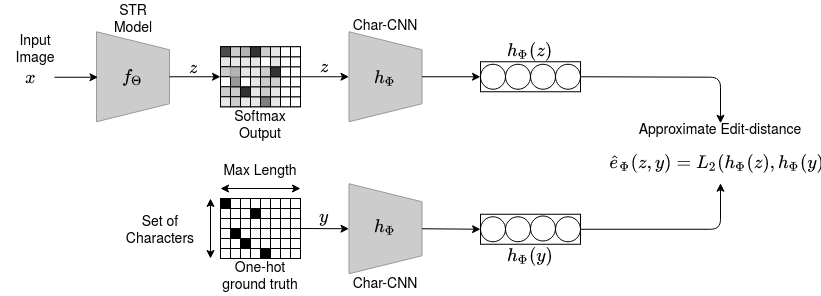

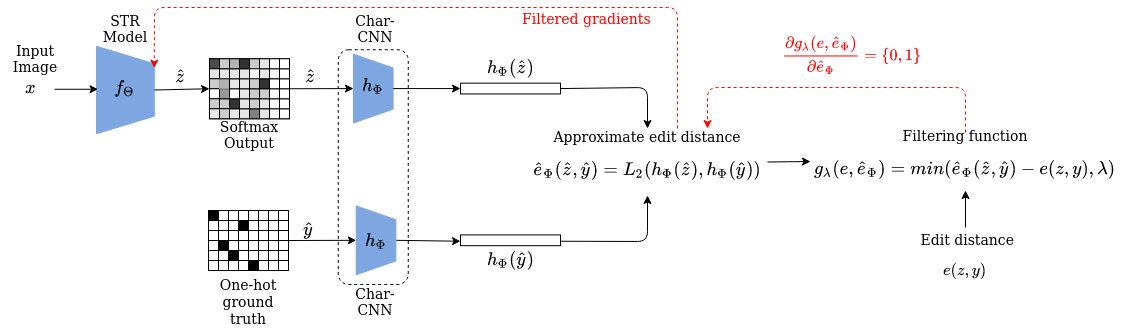

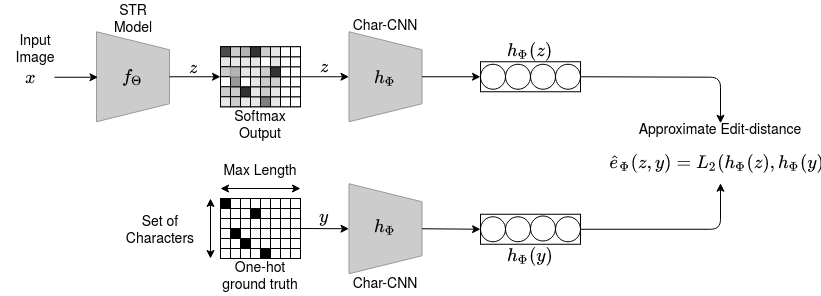

FEDS - Filtered Edit Distance Surrogate

Yash Patel, Jiri Matas

International Conference on Document Analysis and Recognition (ICDAR), 2021

pdf abstract bibtex video

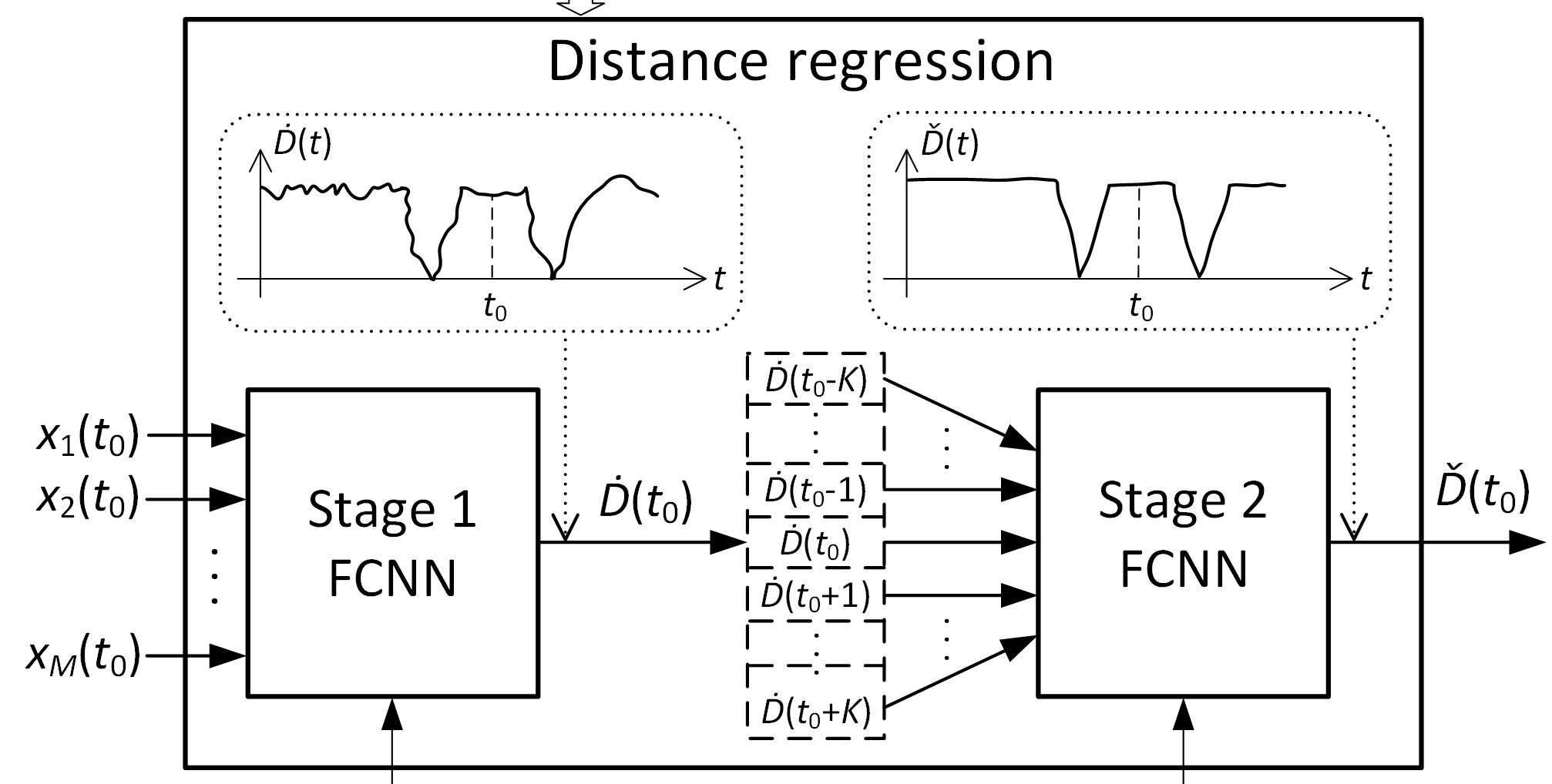

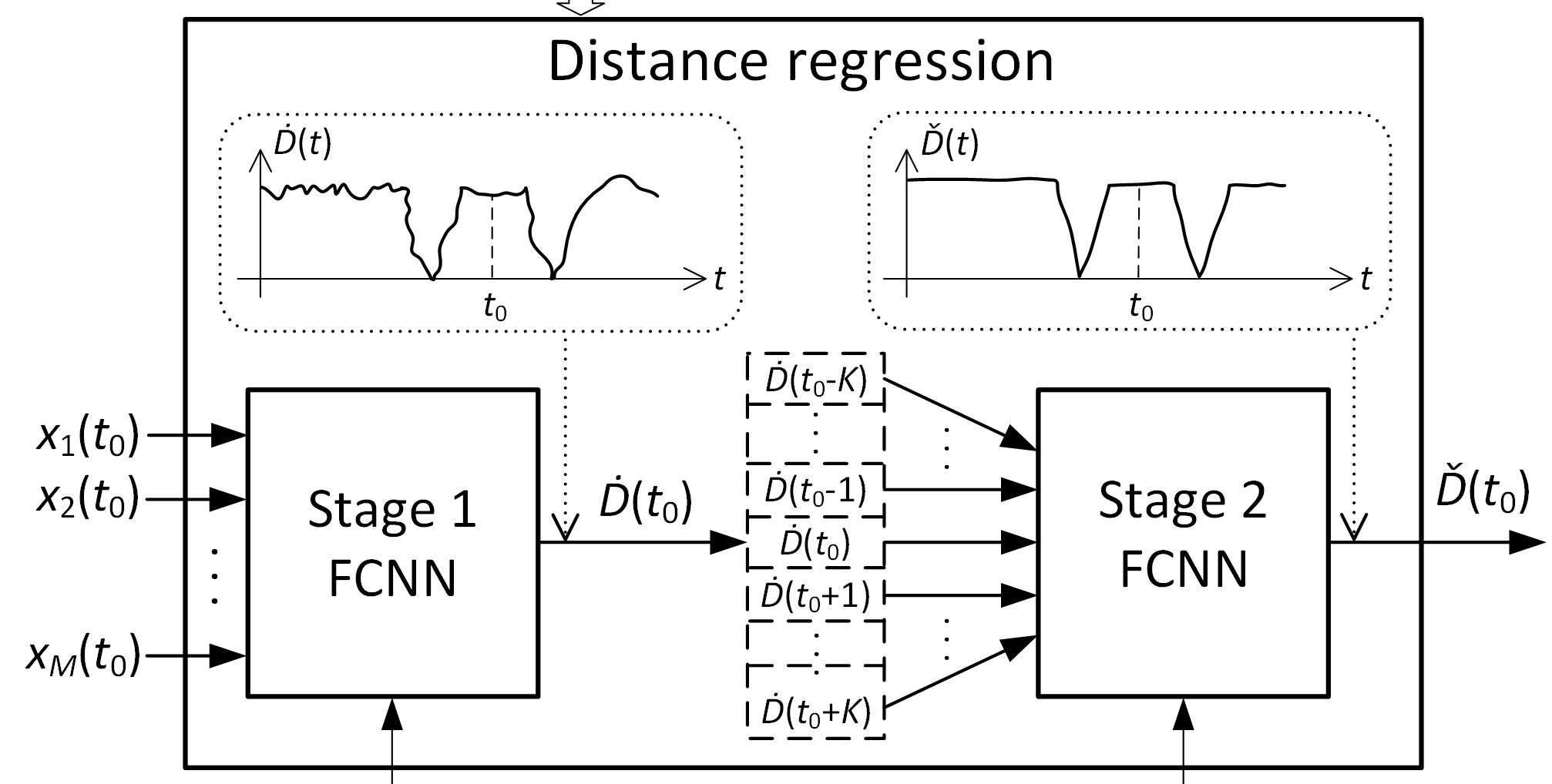

Neural Network-based Acoustic Vehicle Counting

Slobodan Djukanović, Yash Patel, Jiří Matas, Tuomas Virtanen

European Signal Processing Conference (EUSIPCO), 2021

Learning Surrogates via Deep Embedding

Yash Patel, Tomas Hodan, Jiri Matas

European Conference on Computer Vision (ECCV), 2020

pdf abstract bibtex video long video

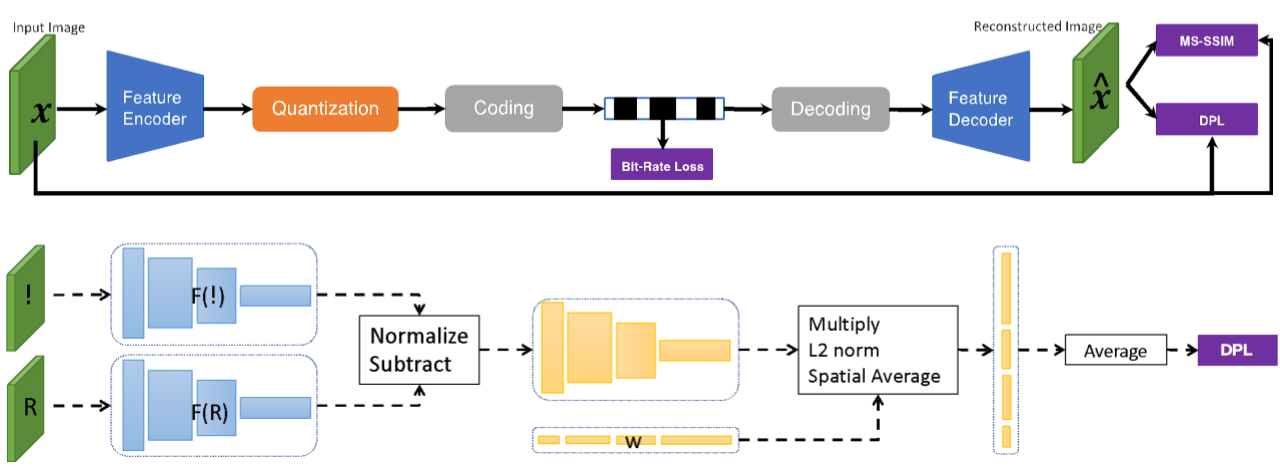

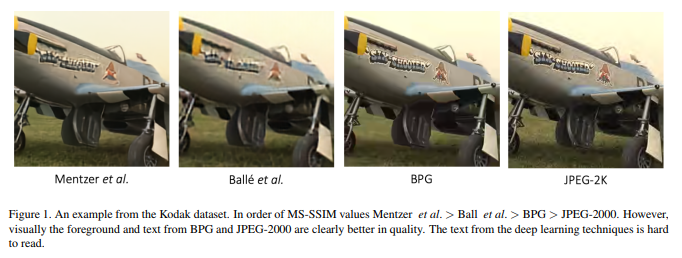

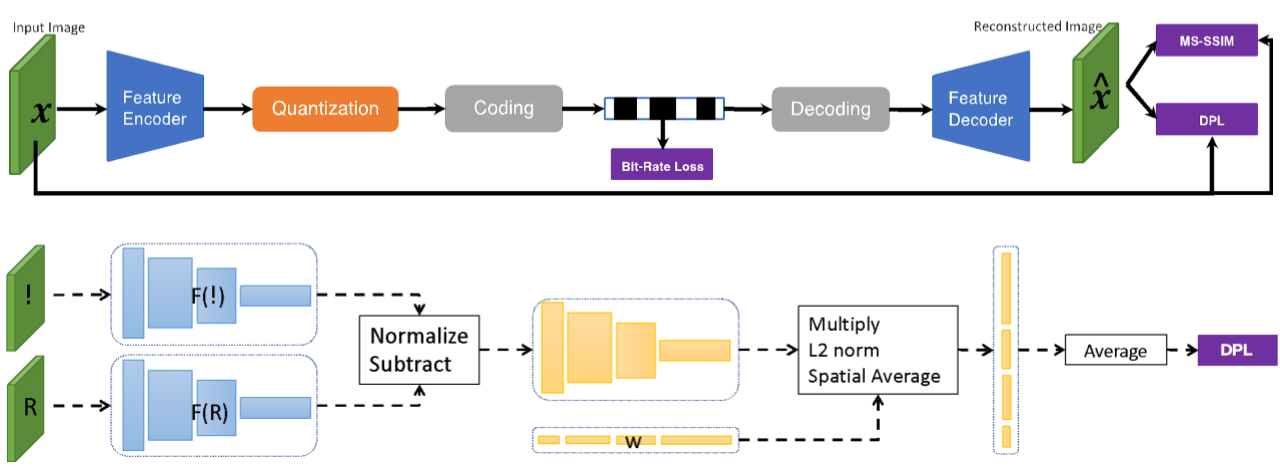

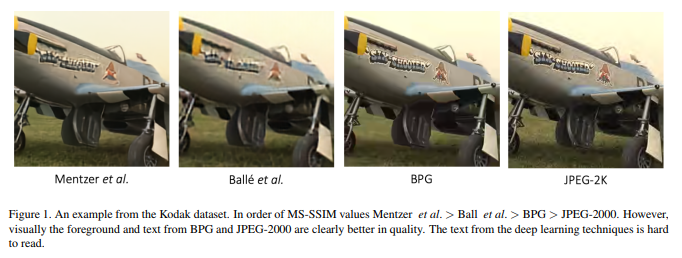

Deep Perceptual Compression

Yash Patel, Srikar Appalaraju, R. Manmatha

arXiv e-print, 2019

Human Perceptual Evaluations for Image Compression

Yash Patel, Srikar Appalaraju, R. Manmatha

arXiv e-print, 2019

pdf abstract bibtex

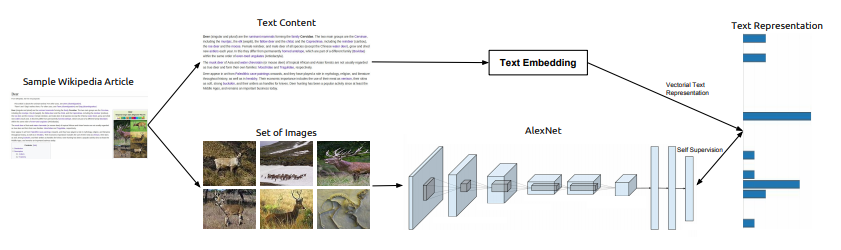

Self-Supervised Visual Representations for Cross-Modal Retrieval

Yash Patel, Lluis Gomez, Marçal Rusiñol, Dimosthenis Karatzas, C.V. Jawahar

International Conference on Multimedia Retrieval (ICMR), 2019 (Spotlight)

pdf abstract bibtex

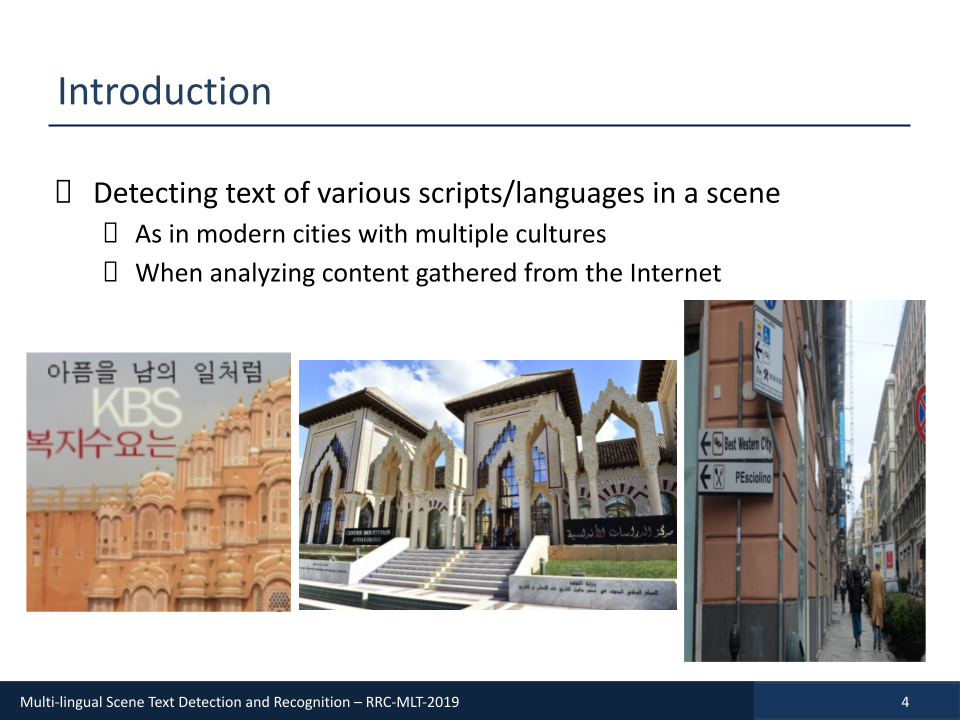

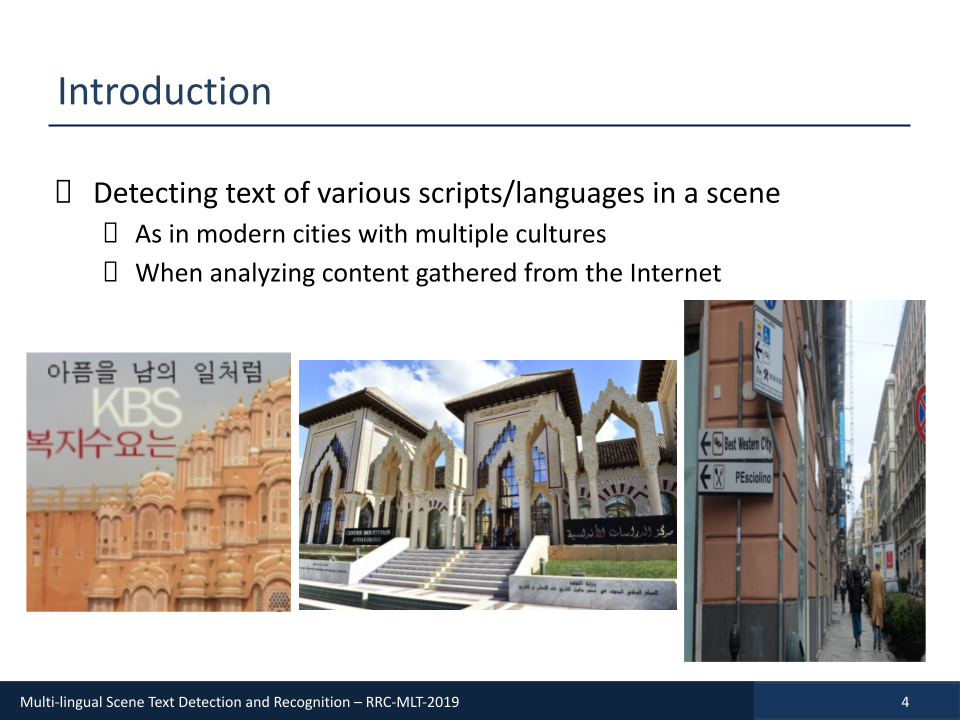

ICDAR2019 Robust Reading Challenge on Multi-lingual Scene Text Detection and Recognition - RRC-MLT-2019

Nibal Nayef*, Yash Patel*, Michal Busta, Pinaki Nath Chowdhury, Dimosthenis Karatzas, Wafa Khlif, Jiri Matas, Umapada Pal, Jean-Christophe Burie, Cheng-Lin Liu, Jean-Marc Ogier

International Conference on Document Analysis and Recognition (ICDAR), 2019 (Oral)

pdf abstract bibtex portal

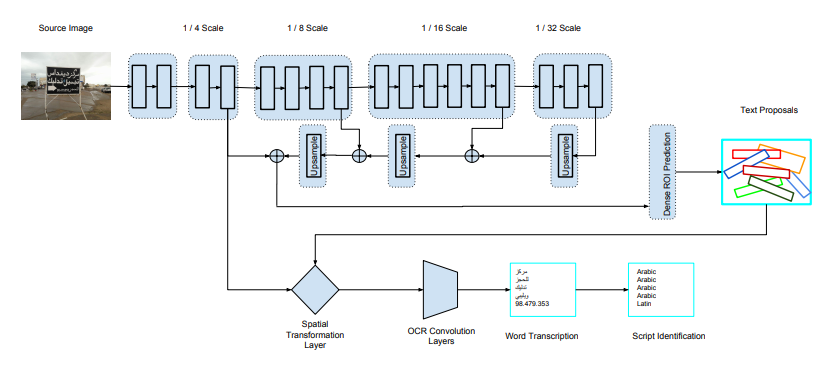

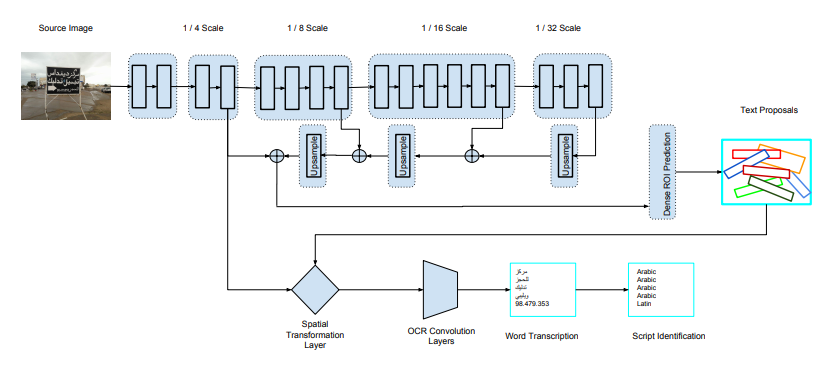

E2E-MLT - an Unconstrained End-to-End Method for Multi-Language Scene Text

Michal Bušta, Yash Patel, Jiri Matas

International Workshop on Robust Reading, Asian Conference on Computer Vision (ACCV), 2018

Best Paper Award

pdf abstract bibtex code

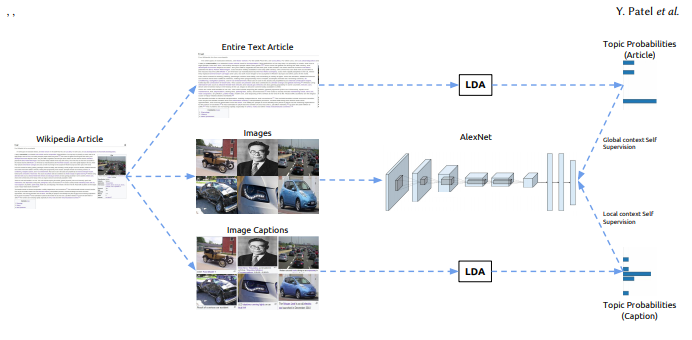

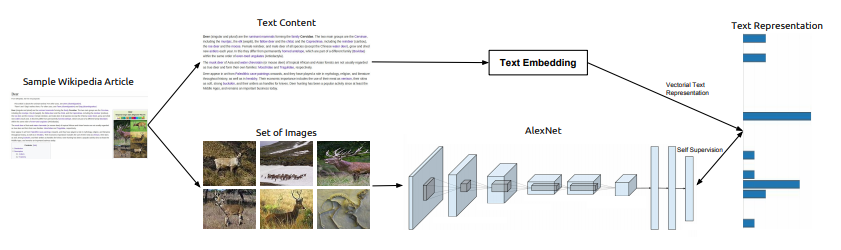

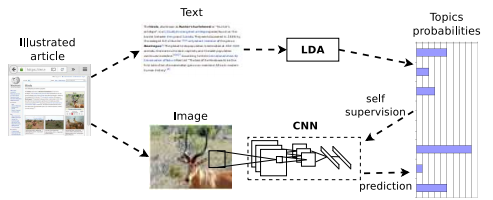

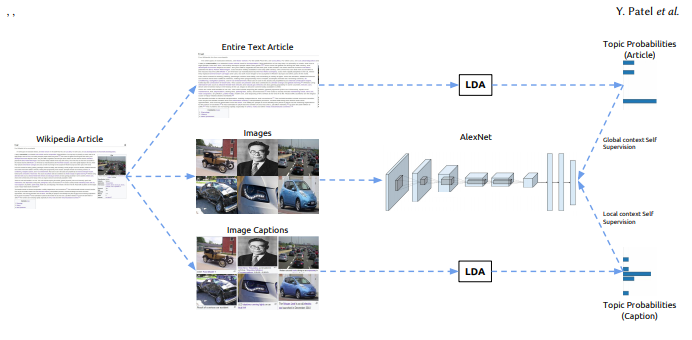

TextTopicNet - Self-Supervised Learning of Visual Features Through Embedding Images on Semantic Text Spaces

Yash Patel, Lluis Gomez, Raul Gomez, Marçal Rusiñol, Dimosthenis Karatzas, C.V. Jawahar

Under review at the Pattern Recognition journal; arXiv e-print, 2018

pdf abstract bibtex code

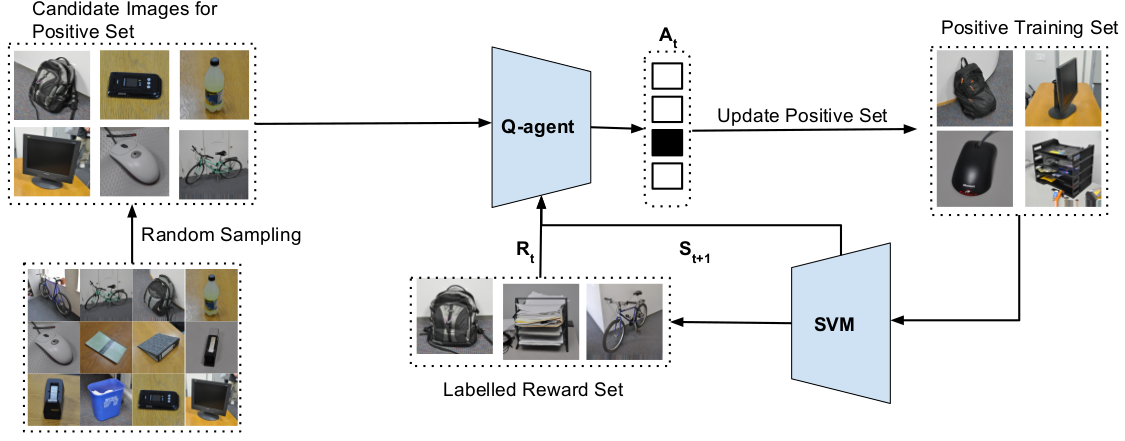

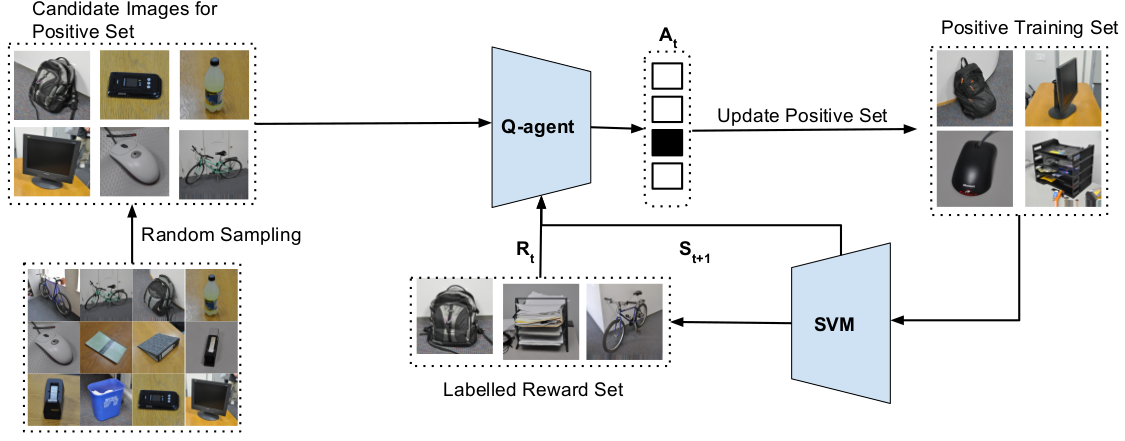

Learning Sampling Policies for Domain Adaptation

Yash Patel*, Kashyap Chitta*, Bhavan Jasani*

arXiv e-print, 2018

pdf abstract bibtex code

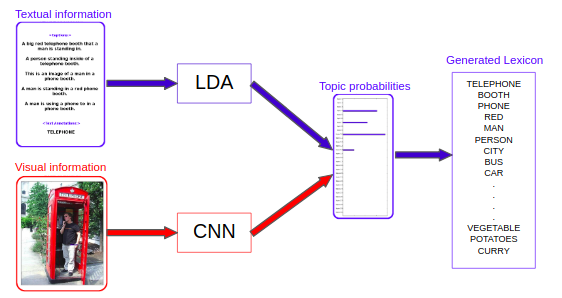

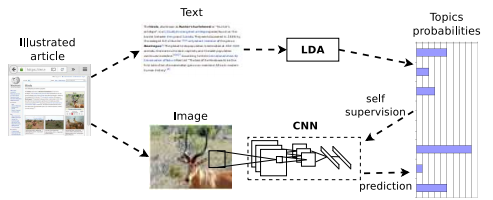

Self-Supervised Learning of Visual Features through Embedding Images into Text Topic Spaces

Lluis Gomez*, Yash Patel*, Marçal Rusiñol, Dimosthenis Karatzas, CV Jawahar

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2017

pdf abstract bibtex code

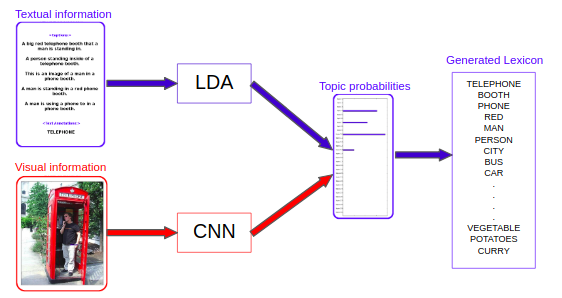

Dynamic Lexicon Generation for Natural Scene Images

Yash Patel, Lluis Gomez, Marçal Rusiñol, Dimosthenis Karatzas

International Workshop on Robust Reading, European Conference on Computer Vision (ECCV), 2016

pdf abstract bibtex code

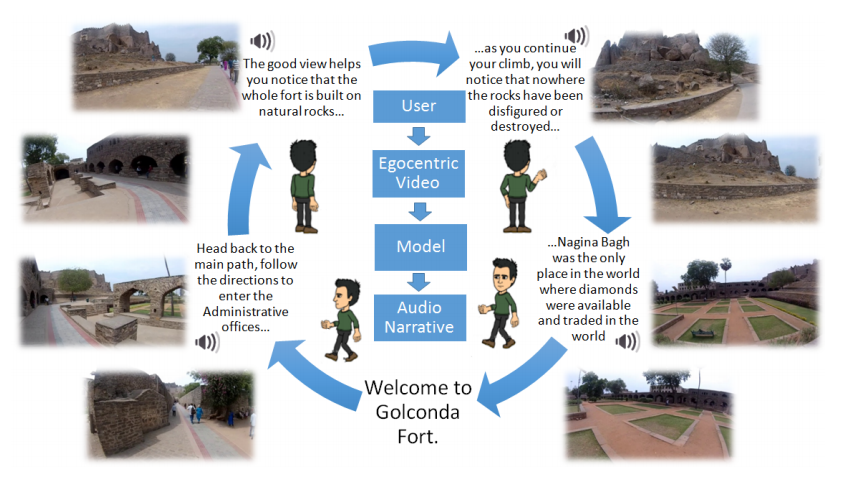

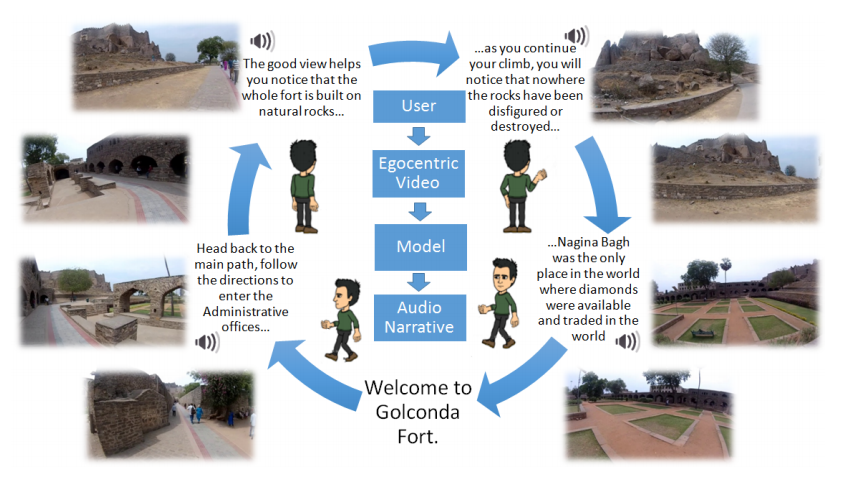

Dynamic Narratives for Heritage Tour

Anurag Ghosh*, Yash Patel*, Mohak Sukhwani, CV Jawahar

VisART, European Conference on Computer Vision (ECCV), 2016

Patents

- (2024) Statistical model training systems, US Patent 11,868,440, Yash Patel, R Manmatha, Alexander Smola, Son D Tran, Sheng Zha.

- (2021) Hierarchical auto-regressive image compression system, US Patent 10,965,948, Srikar Appalaraju, Yash Patel, R Manmatha.

- (2021) Learned lossy image compression codec, US Patent 10,909,728, Srikar Appalaraju, R Manmatha, Yash Patel.

Academic Services

Reviewer for TPAMI, IJCV, ICPR, ACCV, WACV, ICCV, CVPR

- (2023) Organizer, DocILE Lab and Challenge, International Conference on Document Analysis and Recognition (ICDAR).

- (2023) Organizer, DocILE Lab and Challenge, Conference and Labs of the Evaluation Forum (CLEF).

- (2019) Organizer, MLT Competition, International Conference on Document Analysis and Recognition (ICDAR).

- (2019) Organizer, Tutorial on Joint Image-Text Embedding Learning and Applications, International Conference on Document Analysis and Recognition (ICDAR).

- (2018) Organizer/Program Chair, 3rd International Workshop on Robust Reading (IWRR), ACCV 2018.

Awards

- (2024) Antonín Svoboda Award for the Best Ph.D. Thesis.

- (2024) Dean's Award, FEL CVUT, for an outstanding Ph.D. dissertation.

- (2021) Amazon Research Award, for Training Neural Networks on Non-Differentiable Losses (with Prof. Jiri Matas).

- (2018) Best Paper Award for E2E-MLT at IWRR, ACCV 2018.

- (2017) Open Informatics Young Scientist Scholarship, Czech Technical University in Prague.

- (2017) Won (Rank 1) the ICDAR RRC-MLT competition on cropped-word script identification.

- (2017) Dean's Research Award, IIIT Hyderabad, for excellence in research.

- (2016) Dean's Research Award, IIIT Hyderabad, for excellence in research.

- (2016) Dean's Academic Award, IIIT Hyderabad, for being on the merit list.

Talks

Present & Past Affiliations